Editor: Fabien Locussol, Interconnect & Network Product Manager at Atos

RED-SEA builds on BXI (Bull eXascale Interconnect), the European interconnect developed by Atos. BXI is already in production and is used in TOP500 systems. RED-SEA aims to enhance and extend it to meet the challenges of the Exascale era.

This page presents benchmark results for BXI V2, the current generation of BXI.

These results are extracted from a study by the Atos “Application & Performance” team comparing BXI V2, the latest generation of Atos interconnect products, with Mellanox InfiniBand HDR100.

The tests covered both stability and performance. The same tests were run on an equivalent machine equipped with the Mellanox InfiniBand HDR100 interconnect (configuration details are given below).

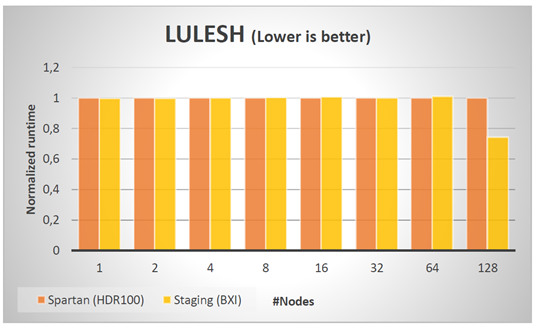

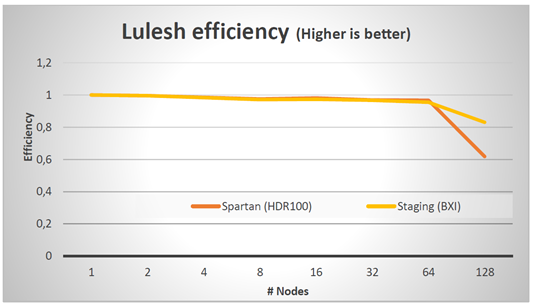

LULESH

Performance is similar on HDR100 and BXI up to 64 nodes. On 128 nodes, however, BXI shows a significant gain over HDR100.

The parallel efficiency of LULESH is very good up to 64 nodes and drops on 128 nodes. The drop is smaller on BXI, which keeps a better efficiency than HDR100 at large scale.

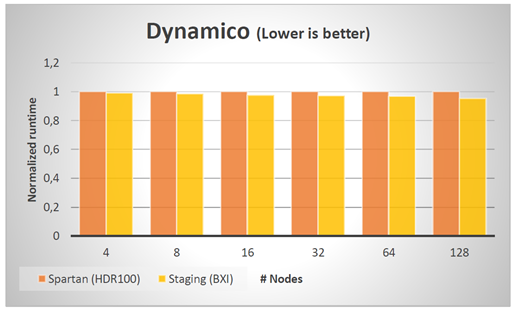

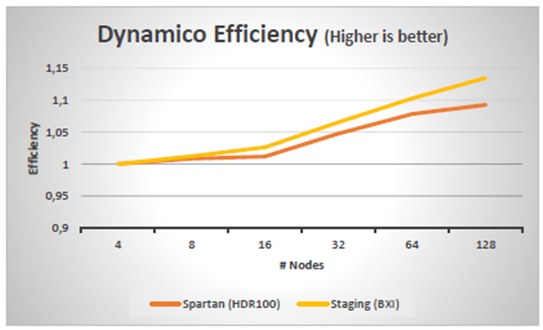

Dynamico

The Dynamico test case is large enough to show overscaling (superlinear scaling): as more nodes are added, each core handles a smaller share of the problem and makes better use of its memory cache. This overscaling is greater on BXI than on HDR100.
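The study does not state how efficiency was computed. Assuming the usual strong-scaling definition (fixed problem size, times measured relative to the smallest run), overscaling corresponds to an efficiency above 1:

$$
S(N) = \frac{T(N_0)}{T(N)}, \qquad
E(N) = \frac{N_0\,T(N_0)}{N\,T(N)} = \frac{N_0}{N}\,S(N)
$$

Here T(N) is the run time on N nodes and N_0 is the smallest node count used. Linear scaling gives E(N) = 1; overscaling means E(N) > 1, i.e. doubling the node count more than halves the run time. With purely illustrative numbers (not taken from the study), going from 16 nodes at 100 s to 32 nodes at 45 s gives E = (16 × 100) / (32 × 45) ≈ 1.11.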

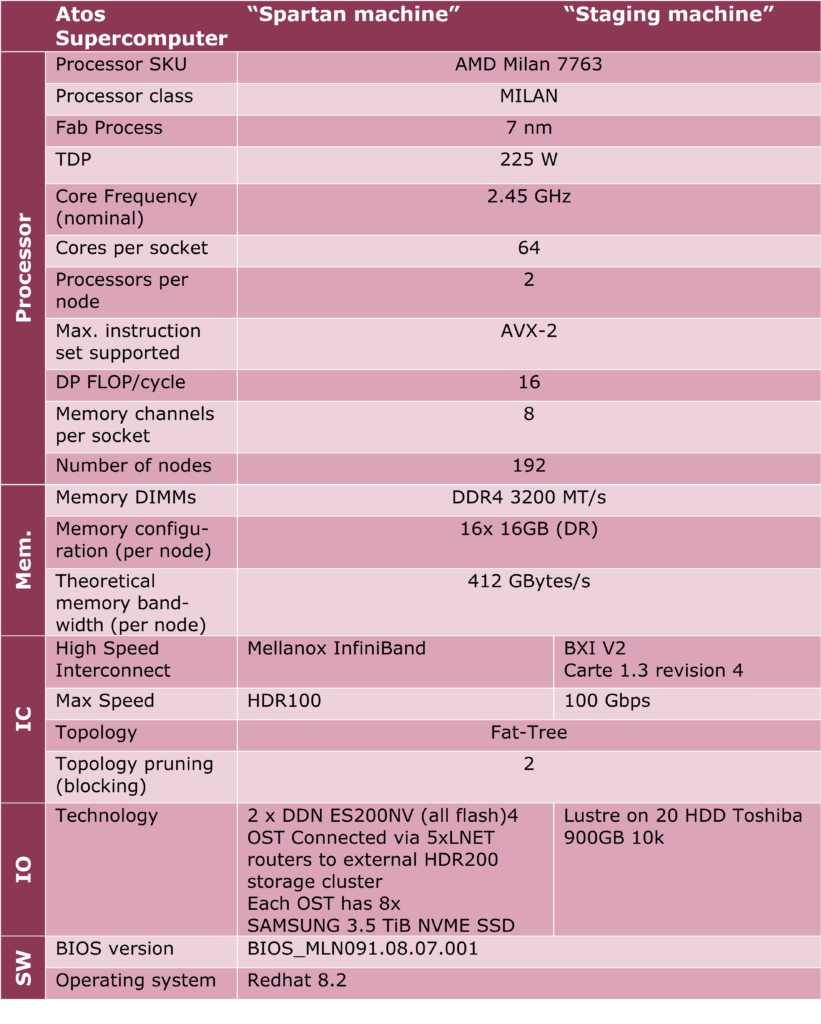

Configuration used

Benchmark environment

BXI V2 environment

To use the BXI environment, we must use the following lines:

```shell
source /opt/mpi/openmpi/4.1.0.0/bin/mpivars.sh intel
export OMPI_MCA_mca_base_envar_file_prefix=/opt/mpi/openmpi/4.1.0.0/etc/profile/bxi_optimized.conf
```

This makes the mpicc, mpic++ and mpif90 wrappers available; all of them use the Intel compiler (the Intel environment, in the desired version, must be loaded beforehand).

The Intel compiler version used was 2020.4.304.

These two lines also set up the environment correctly for execution.

The file /opt/mpi/openmpi/4.1.0.0/etc/profile/bxi_optimized.conf can be copied to another location and modified to change the behaviour of Open MPI, for instance to optimize performance; OMPI_MCA_mca_base_envar_file_prefix must then point to the modified copy instead of the original file.

We used these lines for both compilation and execution in all benchmarks.
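Following the copy-and-modify approach described above for bxi_optimized.conf, a minimal sketch of a helper that makes a private copy of the profile and points Open MPI at it could look as follows. The function name copy_bxi_profile and the destination path in the example are hypothetical; which settings to change inside the copy depends on the tuning goal and is not covered by this study.

```shell
#!/bin/sh
# Hypothetical helper (not part of the BXI software stack): copy an Open MPI
# profile such as bxi_optimized.conf to a user-chosen location and point
# OMPI_MCA_mca_base_envar_file_prefix at the copy.
# Define it in the current shell (e.g. by sourcing this file) so that the
# export affects the environment later used for compilation and execution.
copy_bxi_profile() {
    src=$1   # original profile
    dst=$2   # writable copy, to be edited afterwards
    if [ ! -f "$src" ]; then
        echo "copy_bxi_profile: profile not found: $src" >&2
        return 1
    fi
    cp "$src" "$dst" || return 1
    # Open MPI now uses the copy instead of the original profile
    export OMPI_MCA_mca_base_envar_file_prefix="$dst"
}

# Example (hypothetical destination path):
# copy_bxi_profile /opt/mpi/openmpi/4.1.0.0/etc/profile/bxi_optimized.conf "$HOME/bxi_custom.conf"
```

The variable is only exported after a successful copy, so a typo in the source path leaves the previous setting untouched instead of pointing Open MPI at a missing file.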

InfiniBand HDR100 environment

For all benchmarks run on the InfiniBand HDR100 platform, we used the MPI library provided by Mellanox HPC-X 2.7.0, compiled with the Intel compiler 2020.2.254.

These lines show how we loaded the environment for this platform:

```shell
INTEL_VERSION=2020.2.254
source /opt/intel/compilers_and_libraries_${INTEL_VERSION}/linux/bin/compilervars.sh intel64
HPCX_HOME=/home_nfs_robin_ib/bdardenf/lib/hpcx-v2.7.0-gcc-MLNX_OFED_LINUX-5.1-0.6.6.0-redhat7.7-x86_64
source $HPCX_HOME/hpcx-init_intel${INTEL_VERSION}.sh
hpcx_load
```

For compilation we used the same wrappers as in the BXI V2 environment: mpicc, mpic++ and mpif90.

The Intel compiler version used was 2020.2.254.
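Since both environments rely on the mpicc, mpic++ and mpif90 wrappers calling the Intel compilers, a quick check of which back-end compiler each wrapper invokes can catch a wrongly loaded environment. The sketch below assumes Open MPI wrappers (HPC-X ships an Open MPI build) and their --showme:command option, which prints the underlying compiler command without compiling anything; the function name show_backend_compilers is hypothetical. With the Intel 2020 environments above, the back ends would typically be icc, icpc and ifort.

```shell
#!/bin/sh
# Hypothetical check: print the back-end compiler each MPI wrapper invokes,
# using the Open MPI wrapper option --showme:command.
show_backend_compilers() {
    for wrapper in mpicc mpic++ mpif90; do
        if command -v "$wrapper" >/dev/null 2>&1; then
            printf '%s -> %s\n' "$wrapper" "$("$wrapper" --showme:command)"
        else
            printf '%s -> not found\n' "$wrapper"
        fi
    done
}

# Example, after loading either environment:
# show_backend_compilers
```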

Benchmarking team

Alexis Puddu, Frédéric Darden, Cyril Mazauric