An Open-Source Integrated Solution to Address the Simulator Gap for Parallel Heterogeneous Systems

Authors: Nikolaos Tampouratzis (EXAPSYS), Yannis Papaefstathiou (EXAPSYS).

Highly parallel and distributed computing systems (i.e., Cloud and HPC systems) are growing in capability at an extraordinary rate. They incorporate processing systems that range from simple microcontrollers to high-performance units, connected to each other through numerous networks. One of the main problems that designers of such systems face is the lack of simulation tools that offer realistic insights beyond simple functional testing, such as the actual performance of the nodes, accurate overall system timing, power/energy estimates, and network deployment issues.

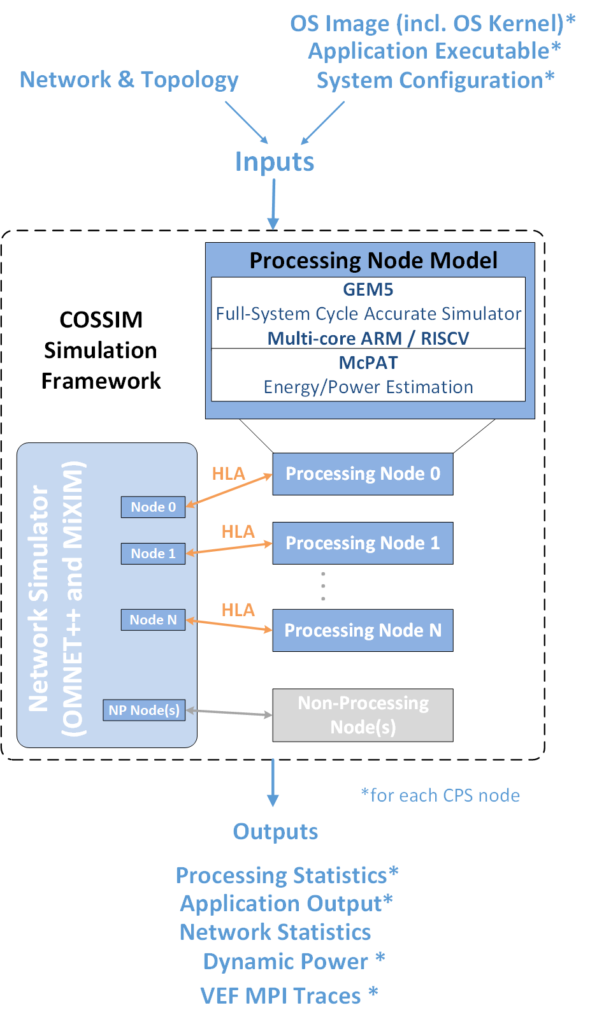

To address these limitations, we develop and extend the open-source COSSIM simulation framework within the RED-SEA project. The proposed solution efficiently integrates a series of sub-tools that model the computing devices of the processing nodes as well as the network(s) used for the interconnections. It provides cycle-accurate results throughout the system by simulating together (a) the actual application and system software executed on each node and (b) the networks that are employed. COSSIM provides accurate performance, power, and energy consumption estimates for both the processing elements and the network(s), based on the actual dynamic usage scenarios. Finally, COSSIM employs a standardized interconnection protocol between its sub-components (IEEE 1516.x HLA [1]), so it can be seamlessly connected to additional similar tools.

The main research challenges addressed in creating COSSIM are the following: (a) developing a novel synchronization scheme that supports the notion of cycle accuracy across the sub-simulators; (b) adding to the synchronization scheme the ability to trade off simulation time against the required timing accuracy; (c) adapting the sub-simulators so that they can fully handle real network packets; (d) adapting the sub-simulators and the synchronization scheme to implement a novel, fully distributed system in which no critical task or sub-simulator is centralized, so that it can efficiently simulate processing nodes interconnected with any available network technology.

Specifically, COSSIM is built on top of several well-established simulators:

- GEM5 [2], a state-of-the-art full-system simulator, to simulate the digital components of each processing node in the simulated system

- OMNET++ [3], which is an established network simulator, to simulate the networking infrastructure

- McPAT, to provide energy and power consumption estimates for the processing nodes, and MiXiM (an OMNET++ add-on), to estimate the energy consumption of the network

- CERTI HLA architecture to bind the whole framework together

In addition, in the context of the RED-SEA project, VEF-Prospector has been integrated into the COSSIM simulator. It captures the MPI calls of the application and gathers them in trace files using a dedicated format (VEF). The MPI traffic generated in COSSIM can therefore be fed into the SAURON simulator through VEF traces in order to simulate the SAURON network capabilities (e.g., the BXI model).

Framework Features

Support of Multiple Architectures: The current version of COSSIM supports both Arm and RISC-V multicore CPUs in Full System mode (simulating a full Ubuntu-based OS), including their peripherals, using the latest gem5 version (v22.0.0.1).

Extended Functionality of Components: Each component of the framework has been extended to provide advanced synchronization mechanisms and establish a common notion of time between all simulated systems.

Usability: A unified Eclipse-based GUI has been developed to provide easy simulation set-up, execution and visualization of results.

Connectivity and Expansion: Through HLA and appropriate modifications to the basic components, the COSSIM framework can be connected to other tools to enable the simulation of devices and/or events. In addition, VEF-Prospector has been imported into COSSIM to obtain MPI traces that can be used in the SAURON simulator.

How to configure the COSSIM MPI environment and get VEF-Traces?

In the following example we mount an Ubuntu image, emulate it through QEMU, compile a simple MPI application, and configure the COSSIM environment for 3 nodes in order to get VEF traces.

1. Mount the disk image

sudo mount -o loop,offset=65536 $HOME/COSSIM/kernels/disks/ubuntu-18.04-arm64-docker.img /mnt

cd /mnt

Note that the sudo password is: redsea1234
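The offset=65536 value passed to mount in step 1 is the byte position at which the root partition starts inside the image file. The source does not say how it was derived; assuming 512-byte sectors, it corresponds to a partition that starts at sector 128. For a different image, the start sector can be read with fdisk -l on the image file and converted in the same way. The helper name partition_offset below is hypothetical and only illustrates the arithmetic.

```shell
#!/bin/sh
# Convert a partition's start sector into the byte offset expected by
# `mount -o loop,offset=...`.
# Assumption: 512-byte sectors unless a second argument says otherwise.
partition_offset() {
    start_sector=$1
    sector_size=${2:-512}
    echo $((start_sector * sector_size))
}

# A partition starting at sector 128 yields the offset used in step 1.
partition_offset 128   # prints 65536
```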

2. Copy the MPI application into the Ubuntu simulated image

sudo cp /home/red-sea/Desktop/mpi_hello_world.c .

3. Emulate the image through QEMU

sudo mount --bind /proc /mnt/proc

sudo mount --bind /dev /mnt/dev

sudo chroot .

echo "nameserver 8.8.8.8" > /etc/resolv.conf

4. Compile the MPI application in the Ubuntu simulated image

mpicc -o mpi_hello_world mpi_hello_world.c

5. Create the hosts file in the Ubuntu simulated image

vi /etc/hosts

Add the following lines in order to declare the IP of each node (this is an example for 3 nodes):

127.0.0.1 localhost

192.168.0.2 node0

192.168.0.3 node1

192.168.0.4 node2

6. Create a .rhosts file in the Ubuntu simulated image

We need to create a .rhosts file in the root home directory and list the hostnames in it, so that the nodes can be accessed without a password.

vi /root/.rhosts

Add the following lines:

node0 root

node1 root

node2 root

7. Create a host_file in the Ubuntu simulated image

We need to create a host_file in order to declare the number of MPI ranks that will be executed on each COSSIM node.

vi host_file

Add the following lines:

node0:1

node1:1

node2:1

8. Create scripts to execute the MPI application with VEF traces

The following 4 scripts are used in order to execute the MPI application with VEF traces
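Before turning to the individual scripts, note that the per-node files from steps 5 to 7 follow a regular pattern, so for larger node counts they can be generated rather than typed. The sketch below is only an illustration: the function name gen_node_files is hypothetical, and the addressing rule (nodeK has the address 192.168.0.(K+2)) is extrapolated from the 3-node example, so it holds only while the last octet stays below 255. The files are written to a scratch directory for review and would then be copied to /etc/hosts, /root/.rhosts and host_file inside the image.

```shell
#!/bin/sh
# gen_node_files N OUTDIR [RANKS_PER_NODE]
# Writes hosts, rhosts and host_file for N nodes into OUTDIR.
# Assumption: nodeK has the address 192.168.0.(K+2), as in the 3-node example.
gen_node_files() {
    nodes=$1
    outdir=$2
    ranks=${3:-1}
    mkdir -p "$outdir"
    echo "127.0.0.1 localhost" > "$outdir/hosts"
    : > "$outdir/rhosts"       # start from empty files
    : > "$outdir/host_file"
    i=0
    while [ "$i" -lt "$nodes" ]; do
        echo "192.168.0.$((i + 2)) node$i" >> "$outdir/hosts"
        echo "node$i root" >> "$outdir/rhosts"
        echo "node$i:$ranks" >> "$outdir/host_file"
        i=$((i + 1))
    done
}

# Example: regenerate the 3-node files in a scratch directory and show one.
scratch=$(mktemp -d)
gen_node_files 3 "$scratch"
cat "$scratch/hosts"
```

With 3 nodes and the default of one rank per node, the generated files match the ones listed in steps 5 to 7.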

i) Set up the correct hostname on all gem5 nodes (through node0)

vi 1.setup_script

Add the following lines (3 nodes):

hostname node0 #declare the hostname for node0

rsh 192.168.0.3 hostname node1 #declare the hostname for node1

rsh 192.168.0.4 hostname node2 #declare the hostname for node2

ii) Execute the MPI application with VEF support (through node0)

vi 2.vef_mpi_execution_script

This is an example for 3 nodes (1 MPI-rank per node):

#declare the vef prospector path

export PATH=$PATH:/opt/vef_prospector/bin/

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/opt/vef_prospector/lib/

m5 resetstats #reset the gem5 statistics before mpi execution

vmpirun -launcher rsh -n 3 -f host_file ./mpi_hello_world #execute the app

m5 dumpstats #dump the gem5 statistics

iii) Gather the VEF traces from other nodes to node0

This script gathers the VEF traces from node1 and node2 on node0. This is necessary so that the VEF mixer can create the final trace file (.vef).
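For more than three nodes, the per-node gathering lines follow a fixed pattern: with one MPI rank per node, the .comm and .veft files of rank K are fetched from node K. The sketch below prints those lines for N nodes so they can be pasted into the script; it does not run rsh itself. The function name gen_gather_lines is hypothetical, the addressing rule 192.168.0.(K+2) is extrapolated from the 3-node example, and the remote trace directory is passed in as a parameter because it depends on the run.

```shell
#!/bin/sh
# gen_gather_lines N TRACE_DIR
# Prints the rsh lines that copy each remote rank's trace files to node0.
# Assumptions: one MPI rank per node, nodeK at 192.168.0.(K+2),
# TRACE_DIR is the trace directory on the remote nodes.
gen_gather_lines() {
    nodes=$1
    trace_dir=$2
    k=1    # node0 already holds its own trace files
    while [ "$k" -lt "$nodes" ]; do
        ip="192.168.0.$((k + 2))"
        echo "#get traces from node$k"
        echo "rsh $ip cat $trace_dir/$k.comm >> $k.comm"
        echo "rsh $ip cat $trace_dir/$k.veft >> $k.veft"
        k=$((k + 1))
    done
}

# Example with a placeholder directory; replace it with the real trace path.
gen_gather_lines 3 /path/to/traces
```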

vi 3.get_vef_traces

Add the following lines (3 nodes):

#declare the vef prospector path

export PATH=$PATH:/opt/vef_prospector/bin/

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/opt/vef_prospector/lib/

#go to traces directory

cd /mpi_hello_world-*

#get traces from node1

rsh 192.168.0.3 cat /mpi_hello_world-/1.comm >> 1.comm

rsh 192.168.0.3 cat /mpi_hello_world-/1.veft >> 1.veft

#get traces from node2

rsh 192.168.0.4 cat /mpi_hello_world-/2.comm >> 2.comm

rsh 192.168.0.4 cat /mpi_hello_world-/2.veft >> 2.veft

#Execute vef_mixer

vef_mixer -i VEFT.main -o output_trace.vef

#read the traces

cat output_trace.vef

iv) Terminate the gem5 instances (through node0)

vi 4.finalization_script

Add the following lines (3 nodes):

rsh 192.168.0.3 m5 exit & #terminate the gem5 node1 execution

rsh 192.168.0.4 m5 exit & #terminate the gem5 node2 execution

9. Unmount the disk image

exit

cd

sudo umount /mnt/proc

sudo umount /mnt/dev

sudo umount /mnt

Further resources

Tutorial demo

This demo shows a LAMMPS benchmark executed in the COSSIM framework on Arm processors, with VEF trace production, for the RED-SEA project.

GitHub

The RED-SEA-modified version of COSSIM is available on GitHub: https://github.com/ntampouratzis/RED-SEA

References

[1] IEEE 1516.x: Standard for Modeling and Simulation High Level Architecture (HLA).

[2] GEM5 2023. The GEM5 Simulator. Retrieved from http://gem5.org/.

[3] OMNET 2023. OMNET++ Discrete Event Simulator. Retrieved from https://omnetpp.org/.